Introduction to AI in Music Composition

The integration of artificial intelligence (AI) into various creative domains has transformed how we approach artistic expression, with music composition being one of the most intriguing areas of exploration. Neural networks, a subset of AI, have shown remarkable potential in generating music that not only mirrors human creativity but also introduces new dimensions to sound. Over the past decade, advancements in machine learning algorithms and computational power have facilitated a significant evolution in AI’s role within the music industry, leading to hybrid forms of collaboration between humans and machines.

Neural networks are loosely inspired by the brain and learn from examples, training on large datasets that include compositions from various genres, periods, and cultural backgrounds. This exposure enables them to identify patterns and structures in music, which they can then recombine into new material. Notable AI projects, such as OpenAI’s MuseNet and Google’s Magenta, illustrate the capabilities of these systems, producing compositions that range from classical pieces to contemporary pop songs. The ability of neural networks to generate original melodies, harmonies, and rhythms raises important questions about creativity, artistry, and the nature of musical expression.

The rise of AI in music composition not only highlights the technological advancements in sound creation but also invites a debate on the superiority of machine-generated music compared to that of human composers. While some argue that machines lack the emotional depth and contextual understanding that human musicians possess, others believe that AI can augment the creative process, offering novel perspectives that a single human composer might overlook. As we delve deeper into this topic, it becomes essential to examine whether neural networks can truly compose better music than humans or if they merely serve as sophisticated tools for human creativity.

Understanding Neural Networks

Neural networks are a class of machine learning models loosely inspired by the biological neural networks in animal brains. They consist of interconnected nodes, or neurons, that pass information to one another through weighted connections. Although the biological analogy is loose, this structure lets neural networks learn to perform complex tasks from data, including music composition. Their architectures vary significantly: feedforward, recurrent, and convolutional neural networks, for example, are each suited to different types of data and applications.
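
Music unfolds in time, which is why recurrent networks, one of the architectures mentioned above, are a common fit for it. The sketch below illustrates the core idea under stated assumptions: a single recurrent cell (an Elman-style update, h = tanh(W_in·x + W_rec·h + b)) that reads notes one at a time and carries a hidden state forward. The weights, the two-unit size, and the (pitch, duration) note encoding are all hypothetical values chosen for illustration, not taken from any real system.

```python
import math

# Hypothetical hand-picked weights for a 2-unit recurrent cell; a trained model would learn these.
W_IN = [[0.5, -0.3], [0.2, 0.8]]   # input features -> hidden units
W_REC = [[0.1, 0.0], [0.0, 0.1]]   # previous hidden state -> hidden units (the recurrent loop)
BIAS = [0.0, 0.0]

def rnn_step(x, h):
    """One Elman-style step: h_new = tanh(W_IN @ x + W_REC @ h + BIAS)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(W_IN[j], x))
            + sum(w * hi for w, hi in zip(W_REC[j], h))
            + BIAS[j]
        )
        for j in range(len(BIAS))
    ]

def encode(notes):
    """Feed notes one at a time; the hidden state summarizes everything seen so far."""
    h = [0.0] * len(BIAS)
    for x in notes:
        h = rnn_step(x, h)
    return h

# Each note is a hypothetical (MIDI pitch / 127, duration in beats) pair.
a = (60 / 127, 1.0)
b = (67 / 127, 0.5)
c = (64 / 127, 1.0)
print(encode([a, c]))
print(encode([b, c]))  # same final note, different history -> different state
```

Because the hidden state depends on the whole history, two sequences that end on the same note still produce different states, which is what lets a trained recurrent model condition its next note on what came before.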

Deep learning refers to neural networks that stack multiple layers of neurons (hence “deep”) between input and output. Each layer transforms the output of the layer before it, so that successive layers learn increasingly abstract features. This layered approach allows deep networks to capture intricate patterns in data, making them powerful tools for tasks such as image recognition, natural language processing, and music generation.
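
As a minimal sketch of the layered idea, assuming tiny hand-picked weights rather than learned ones, the following Python passes an input vector through three fully connected layers and turns the final scores into probabilities over three hypothetical candidate notes. The layer sizes, the ReLU activation, and the softmax output are illustrative choices, not a description of any particular music model.

```python
import math

def dense(x, weights, bias):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, x) for x in v]

def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical network: 4 inputs -> 3 hidden -> 3 hidden -> 3 output "note" scores.
LAYERS = [
    ([[0.2, -0.1, 0.4, 0.0], [0.5, 0.3, -0.2, 0.1], [-0.3, 0.2, 0.1, 0.6]], [0.0, 0.1, -0.1]),
    ([[0.6, -0.4, 0.2], [0.1, 0.5, -0.3], [-0.2, 0.3, 0.7]], [0.05, 0.0, 0.0]),
    ([[0.3, 0.2, -0.5], [-0.1, 0.4, 0.2], [0.5, -0.3, 0.1]], [0.0, 0.0, 0.0]),
]

def forward(x):
    for i, (w, b) in enumerate(LAYERS):
        x = dense(x, w, b)
        if i < len(LAYERS) - 1:
            # Nonlinearity between layers; without it, stacked layers collapse into one linear map.
            x = relu(x)
    # Turn the final scores into a probability distribution over the 3 candidate notes.
    return softmax(x)

probs = forward([1.0, 0.0, 0.5, 0.25])
print(probs)
```

Each call to `dense` is one layer transforming the previous layer's output, which is the "layered approach" in its simplest form.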

Machine learning, broadly, is the discipline concerned with algorithms whose performance on a task improves as they are exposed to more data. Neural networks exemplify this idea and typically need large amounts of data to train effectively. In the context of music composition, a neural network might be trained on many musical works to learn the characteristics of various styles, structures, and genres, ultimately enabling it to create new compositions that imitate the nuances of human-created music.

Training alternates between two main phases: the feedforward phase, in which input data flows through the network to produce an output, and the backpropagation phase, in which the output is compared with the desired result, the error is propagated backward to compute how much each weight contributed to it, and the weights are adjusted to reduce that error. Repeating this cycle over many examples improves the model’s accuracy on its training objective and makes its output follow the musical patterns in its data more closely, although lower error alone does not guarantee music that resonates with human listeners.
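
The two phases can be seen in the smallest possible case: a single linear neuron fitted by gradient descent. This is a hypothetical toy problem (learning y = 2x + 1 from five points), not a music model, and it assumes mean squared error as the error measure; in a deep network the same chain-rule step is repeated layer by layer.

```python
# Hypothetical toy data: five points on the line y = 2x + 1.
XS = [0.0, 0.25, 0.5, 0.75, 1.0]
YS = [2 * x + 1 for x in XS]

def train_linear_neuron(xs, ys, lr=0.5, epochs=1000):
    """Fit pred = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Feedforward phase: compute predictions with the current weights.
        preds = [w * x + b for x in xs]
        # Compare with the desired outputs: dLoss/dpred for mean squared error.
        dpred = [2 * (p - y) / n for p, y in zip(preds, ys)]
        # Backpropagation phase: the chain rule carries the error back to each weight
        # (dpred/dw = x, dpred/db = 1).
        grad_w = sum(d * x for d, x in zip(dpred, xs))
        grad_b = sum(dpred)
        # Update: step each weight against its gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = train_linear_neuron(XS, YS)
print(f"w={w:.4f}, b={b:.4f}")
```

With this learning rate the iteration converges, so the learned weights end up very close to w = 2 and b = 1. A learning rate that is too large would make the updates overshoot and diverge, which is why it is a parameter worth tuning.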

Historical Context of Music Composition

The history of music composition is deeply intertwined with cultural and social transformations, showcasing humanity’s evolving creative expression. From prehistoric times when music likely emerged as a form of communication and ritual, the journey of musical innovation has been shaped by various styles, genres, and technological advancements. Ancient civilizations, such as the Greeks and Romans, began to formalize music theory, leading to the creation of scales, modes, and notational systems that became the foundation of Western music. This systematic approach laid the groundwork for future composers, allowing for greater complexity and artistic expression.

During the Medieval and Renaissance periods, the role of music shifted dramatically as polyphony introduced layers of harmony, expanding the emotional and aesthetic possibilities of composition. Composers such as Palestrina and Josquin des Prez established techniques that allowed for intricate interweaving of melodic lines, setting the stage for future developments. The Baroque era followed, with figures like J.S. Bach and Handel, who emphasized contrast and ornamentation, further pushing the boundaries of musical form and function.

The Classical period emphasized clarity, balance, and formal structure, while the Romantic period increasingly prioritized emotional expression and individual creativity. Composers such as Mozart and Beethoven sought not only to entertain but also to convey profound sentiments, establishing a deep emotional connection with their audiences. What distinguishes human composition is the ability to infuse music with personal experience and cultural context, something AI still struggles to replicate fully. In the 20th century, movements such as jazz, rock, and electronic music made composition a reflection of rapid societal change. In exploring AI-generated music, it is essential to consider this historical backdrop, as it frames what makes human-created music distinctive.

Notable Examples of AI-Generated Music

In recent years, the capabilities of neural networks in the realm of music composition have become increasingly recognized and appreciated. One prominent example is OpenAI’s MuseNet, which utilizes deep learning techniques to generate original compositions across multiple genres. MuseNet can analyze and mimic various musical styles by using a vast dataset of compositions. This allows it to not only create new pieces but also to collaborate with human artists, enhancing the creative process through machine assistance.

Another significant advancement in AI-generated music is Google’s Magenta project. Magenta is an open-source research project that trains machine learning models on large collections of music to create a wide range of musical content, including melodies, harmonies, and even complete songs. Its models have produced works that many listeners find technically proficient and, at times, emotionally engaging. The project also serves as a platform for artists and developers to explore the intersection of technology and creativity.

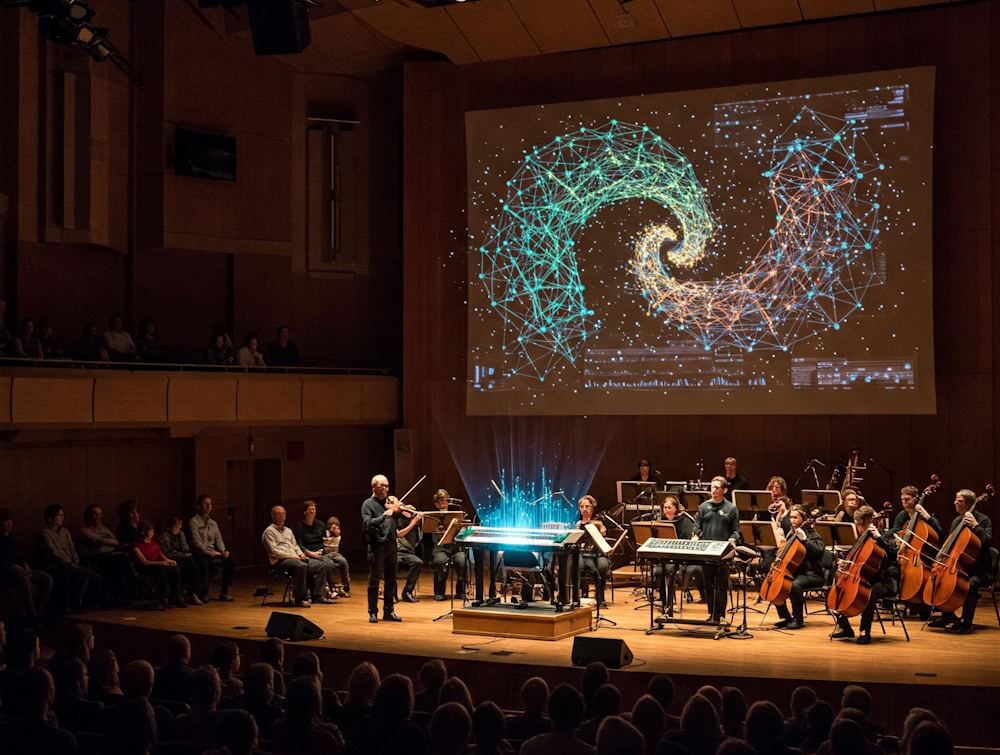

Several performances and projects have also showcased the potential of AI in the music industry. For instance, collaborations between AI systems and well-known musicians have produced pieces that surprise and engage audiences. Events such as the “AI Music Festival” have highlighted the unusual soundscapes generated by neural networks, suggesting that AI can act as a genuine collaborator rather than simply a tool. This growing acceptance of AI in music composition points to a notable shift in how music is created, appreciated, and understood.

Overall, the remarkable examples of AI-generated music not only demonstrate the capabilities of advanced neural networks but also invite ongoing exploration into the relationship between technology and artistic expression. As these systems continue to evolve, they promise to transform the landscape of music composition, opening new avenues for creativity and innovation.

The Strengths of AI in Music Composition

The application of artificial intelligence in music composition offers several strengths that can enhance the creative process. One of the primary benefits is efficiency: algorithms can analyze and generate music far faster than human composers can. This speed allows many candidate compositions to be explored in a fraction of the time a human musician would need, which can surface ideas and concepts that might otherwise remain unexplored.

AI’s ability to analyze vast musical datasets is another significant strength. By examining a wide range of musical styles, genres, and structures, AI systems can identify recurring patterns and generate new compositions that draw on diverse influences. This analysis can suggest creative alternatives that human composers might not typically consider, leading to works that blend elements from various traditions and styles into cohesive pieces. Such diversity not only enriches the body of available music but also challenges conventional genre boundaries.
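
The idea of learning patterns from a corpus and recombining them can be illustrated with a much simpler model than a neural network. The sketch below swaps in a first-order Markov chain, which records which note follows which across a set of melodies and then samples new sequences from those transitions. The two training melodies are hypothetical, and the model is a deliberately simple stand-in for the statistical pattern-finding described above, not how MuseNet or Magenta work.

```python
import random
from collections import defaultdict

# Two hypothetical melodies standing in for "diverse influences".
MELODIES = [
    ["C", "D", "E", "G", "E", "D", "C"],
    ["A", "C", "D", "E", "G", "A", "G", "E"],
]

def learn_transitions(melodies):
    """Record every note that follows each note; duplicates preserve how often it happens."""
    table = defaultdict(list)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            table[current].append(nxt)
    return table

def generate(table, start, length, seed=None):
    """Sample a melody; choice() over the list weights each successor by its frequency."""
    rng = random.Random(seed)
    melody = [start]
    # Stop early if the current note was never followed by anything in training.
    while len(melody) < length and table.get(melody[-1]):
        melody.append(rng.choice(table[melody[-1]]))
    return melody

table = learn_transitions(MELODIES)
print(generate(table, "C", 12, seed=7))
```

Because the transition table pools both melodies, a generated line can move between material drawn from each, a rudimentary version of blending influences; a neural network can learn far richer, longer-range patterns than this one-note context.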

Furthermore, AI can produce complex musical structures. Advanced systems can model intricate relationships between melody, harmony, rhythm, and dynamics, enabling sophisticated arrangements. As a result, AI-generated compositions can incorporate elements traditionally associated with expert human composers and engage listeners on multiple levels. This combination of computational power and musical output positions AI as a valuable collaborator in the composition process.

Incorporating AI into music creation ultimately reveals the potential for significant artistic advancement, expanding human perspectives in music composition. By leveraging the strengths of AI, composers can push the boundaries of their creativity, creating works that are not only innovative but also grounded in a comprehensive understanding of musical theory and practice.

Limitations of Neural Networks in Music Creation

While neural networks have made significant advances in music composition, it is crucial to acknowledge their inherent limitations. One of the most prominent is that they often fail to achieve the emotional depth that characterizes much human-created music. Humans can infuse their compositions with personal experiences, emotions, and cultural contexts, producing a rich tapestry of sound that resonates with listeners on a deeper level. In contrast, neural networks generate music from statistical patterns in their training data, which may result in technically proficient pieces that lack the emotional connection found in human compositions.

Furthermore, the context and cultural nuances that influence human music creation are often lost in the output of these algorithms. Music is deeply rooted in cultural traditions and social settings, elements that neural networks struggle to comprehend. For example, a human composer might draw from their cultural background or current societal issues to create a piece that reflects these themes. On the other hand, a neural network generating music lacks the subjective experience needed to understand and convey these nuances, which can lead to compositions that may sound generic or out of touch with real-world sentiments.

Moreover, deciding what counts as ‘better’ music is complex. Many might equate better music with technical proficiency and complexity; however, the essence of music often transcends these metrics. Human intuition and creativity allow composers to break rules and innovate in ways that neural networks cannot easily replicate. While neural networks can assist and augment the music creation process, they still have significant limitations when it comes to understanding and conveying the emotional and cultural depths of music. These limitations raise the question of whether artificial intelligence can ever truly compete with the human touch in music composition.

Comparative Analysis: AI vs. Human Composers

The evolving landscape of music composition has increasingly placed artificial intelligence (AI) and human ingenuity in direct comparison. AI-generated music is not only a technological marvel but also a subject of debate regarding its creativity and originality as compared to human composers. Musicians and composers have voiced strong opinions about the merits and shortcomings of both approaches. This analysis aims to elucidate these perspectives while exploring the themes of creativity, originality, and expressiveness in musical compositions.

Creativity, traditionally regarded as a hallmark of human expression, is often questioned in AI-generated pieces. Critics argue that while machines can execute algorithms to create melodies or harmonies, they lack the emotional depth that human experiences imbue into musical works. Human composers draw from personal experiences, cultural contexts, and emotional nuances, elements that AI struggles to replicate. However, proponents of AI posit that the technology can generate innovative sounds and combinations previously unimagined, thus redefining creativity in music.

Originality is another critical aspect where AI and human compositions diverge. Human artists are often celebrated for their distinct styles and idiosyncratic influences, shaping their unique sonic identities. In contrast, AI tends to generate compositions based on pre-existing data sets, raising the question of whether AI can truly innovate or merely remix existing ideas. This reliance on historical data presents challenges to originality but can also serve as a foundation for new forms of musical expression if approached thoughtfully.

When evaluating music quality, researchers and critics typically combine qualitative and quantitative measures. Quantitative measures can assess aspects such as melodic structure and harmonic complexity, while qualitative assessments take into account emotional resonance and thematic depth. The interplay between these methods underscores the intricate nature of music, making it essential to consider both human and AI contributions holistically. In conclusion, the comparative analysis of AI-generated music and human compositions reveals a dynamic exchange that continues to challenge the definitions of creativity and originality within the musical landscape.
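
As one example of a quantitative measure, assuming pitches are given as MIDI note numbers, the sketch below computes the Shannon entropy of a melody's pitch-class histogram: H = -Σ p_i log2 p_i, where p_i is the share of notes in pitch class i. It ranges from 0 bits (a single pitch class) to log2(12) ≈ 3.585 bits (all twelve used equally). This metric is an illustrative choice, not a standard the text prescribes, and like any such number it says nothing about emotional resonance.

```python
import math
from collections import Counter

def pitch_class_entropy(midi_pitches):
    """Shannon entropy in bits of the pitch-class histogram (octave-equivalent pitches merged)."""
    classes = Counter(p % 12 for p in midi_pitches)  # e.g. 60 (C4) and 72 (C5) both map to 0
    total = sum(classes.values())
    return -sum((c / total) * math.log2(c / total) for c in classes.values())

c_major_scale = [60, 62, 64, 65, 67, 69, 71]
chromatic_scale = list(range(60, 72))
print(pitch_class_entropy(c_major_scale))    # seven classes used once each: log2(7)
print(pitch_class_entropy(chromatic_scale))  # all twelve classes: log2(12)
```

Higher values indicate a melody that spreads its notes more evenly across pitch classes; a quantitative score like this can complement, but not replace, the qualitative judgments described above.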

Collaborative Opportunities: Humans and AI

The intersection of technology and creativity has yielded fascinating opportunities in music composition through the development of AI. Rather than viewing artificial intelligence as a competitor, many artists are beginning to embrace AI as a collaborator that can augment and enhance their creative processes. This dynamic interplay not only broadens the horizons of composition but also fosters innovative approaches in music creation.

Numerous case studies illustrate how artists have successfully integrated AI into their workflows. One prominent example is the collaboration between electronic artist Holly Herndon and her AI “baby,” Spawn. This project showcases Herndon’s belief that AI can serve as a partner in creativity, allowing her to explore new sonic textures and compositions. The inclusion of AI allowed her to expand her artistic range while still maintaining her unique voice, highlighting that the blend of human artistry and machine learning can generate remarkable musical experiences.

Similarly, in classical music, renowned composer and conductor Eric Whitacre used an AI system to assist in orchestrating pieces. This collaboration led to new works that incorporated AI-generated melodies while staying true to Whitacre’s artistic vision. The blend of human intuition and AI’s analytical capabilities has thus energized classical composition, suggesting that together they can achieve results neither could reach alone.

The potential for collaboration extends beyond individual projects, as platforms that facilitate partnerships between musicians and AI continue to emerge. Companies are developing tools that let composers interact with AI in real time, guiding its output while benefiting from its analytical capabilities. This synergy can deepen understanding of music theory and composition techniques, offering new opportunities for learning and creativity in the music industry. As we move forward, collaboration between humans and AI promises to reshape not only how music is composed but also how it is experienced.

Future Prospects of AI in Music Composition

The rapidly advancing field of artificial intelligence (AI) suggests a promising future for AI in music composition. As neural networks continue to develop, they may overcome the limitations seen in current music generation. Emerging technologies, such as improved machine learning algorithms and greater data processing capacity, could enable neural networks to model complex musical structures, styles, and genres in finer detail. This deeper modeling could lead to AI systems that not only emulate existing musical traditions but also create innovative compositions that resonate with human emotions in new ways.

One significant area of development lies in the ability of AI to collaborate with human composers. Future advancements may allow these systems to function as creative partners rather than mere tools. Such collaborations could yield a variety of musical styles and genres, pulling from extensive databases of existing works to inspire original pieces. The potential for this synergy suggests a transformative shift in the music industry, where AI-generated compositions might find their place alongside traditional human artistry.

As the music landscape evolves, it is essential to consider the societal implications of AI in composition. With neural networks taking on a more prominent role, concerns regarding authenticity and originality may arise. Questions about the value of human creativity versus machine-generated compositions will inevitably lead to discussions around copyright and ownership of musical works. Moreover, as listeners begin to accept AI-generated music, there may be a redefinition of artistic expression, challenging conventional notions about what it means to be a composer.

In this context, the relationship between technology and art will continue to evolve, creating a dynamic interplay that influences both the creation and consumption of music. The future of AI in music composition holds the potential not only for innovation but also for a reimagining of the artistic process itself.